How we integrated a pilot version of a legal diagnostic AI tool into the online Intake Tool

Project Overview

Project background

To help people identify their legal problems, Justice Connect has partnered with Melbourne University to create an AI model that uses natural language processing (NLP) to diagnose legal issues from a person’s own words. The next step of the project was to test it in the online Intake Tool (the Intake Tool), where people can apply for legal help.

The challenge

We needed to integrate the initial version of the AI model into part of the Intake Tool as a pilot to test its effectiveness in the real world.

My role

- Worked with a small cross-functional team, including a project manager, a developer and a lawyer.

- Designed the screens, from sketches through wireframes to prototypes; organised usability testing; and planned how we would measure success.

Solution

Our solution was to run an A/B test by adding a new “AI-First” pathway to the “Program Sorter”. 25% of users would get the AI-First pathway, which let us compare their data directly with that of users on the standard pathway.

Problem and process

Legal areas are complex and confusing

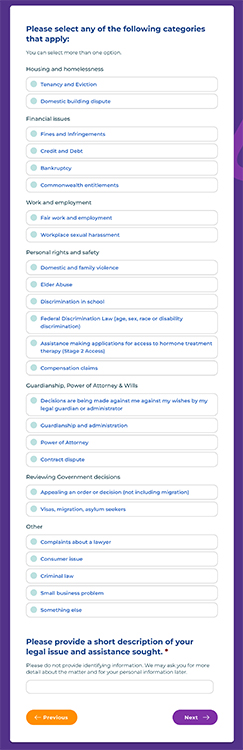

Previous research by Justice Connect had shown that “…41% of Justice Connect online users identified as unable to categorise their legal issue. We also found evidence of very high inaccuracy rates amongst those believing they applied a correct category.”

When going through the Intake Tool, users are presented with up to 25 legal categories to choose their problem from. As users told us: “It’s complex and I’m not an expert!” and “[I’m unsure of the category] because of the family relationship [issue] together with financial issue.”

This is a problem because:

- Users waste time applying for inappropriate services

- The process may be too complex and cause people to give up

- It compounds already stressful situations

- Staff have to reject a lot of ineligible applications

- Users may miss applying for the correct service

AI might be able to help people identify their legal problem

This AI aims to diagnose legal problems from a person’s written or spoken description. Of course, the law is complex and subjective, so the AI cannot always be 100% accurate. It can still help point a person in the right direction, which we hope will improve the confidence of people seeking legal help. This project would be the first step in integrating AI into the Justice Connect ecosystem, and an opportunity to gather data on its effectiveness and identify problems.

Constraints:

- Our team and a supplier had limited capacity, which caused several delays and meant some stages were rushed.

- We could not test with real users, so we ran usability testing with other staff and some students.

- The Intake Tool suffers from technical debt and needs a lot of work, but changes that didn’t directly affect this project were out of scope.

Process

- Where in the flow of the tool to integrate

- How to handle various scenarios

- Designing and testing the new screens

- Results so far and next steps

- What I learnt

Where in the flow of the tool to integrate

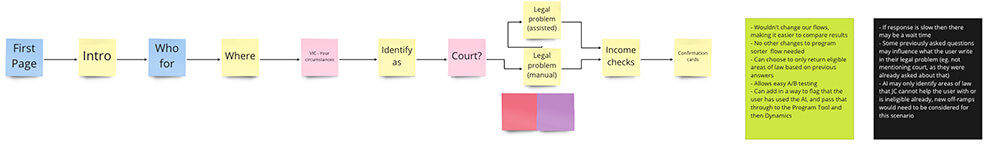

The Intake Tool comprises several parts, including what we call the Program Sorter and a set of tools for the individual services Justice Connect offers. The Program Sorter is the first step for most users and helps direct them to apply for the correct service(s). From the start, the AI concept centred on integrating it into the Program Sorter, so we next needed to decide where in that flow it should go.

After reviewing the options, we decided to add the AI at the same point in the flow as the existing manual option, where users select from the list of categories.

This offered several advantages:

- It wouldn’t change our flows, making results easier to compare

- No other changes to the Program Sorter flow would be needed

- It would allow easy A/B testing

- It would be straightforward to add ways to track whether the AI was used and pass that data through to our CRM

And a few potential issues:

- If the AI’s API responded slowly, users might have to wait

- Questions asked earlier in the flow might influence what users write about their legal problem (e.g. not mentioning court because they had already been asked about it)

- The AI might identify only areas of law that Justice Connect cannot help with, or for which the user is already ineligible. New off-ramps would need to be considered for this scenario

What we weren’t changing

It had been decided that we would change the Program Sorter as little as possible, including adding no new categories. The only differences were the new screens for the AI pathway. The categories available to users would stay the same, just presented differently. That meant I could focus my time on designing the screens and the experience.

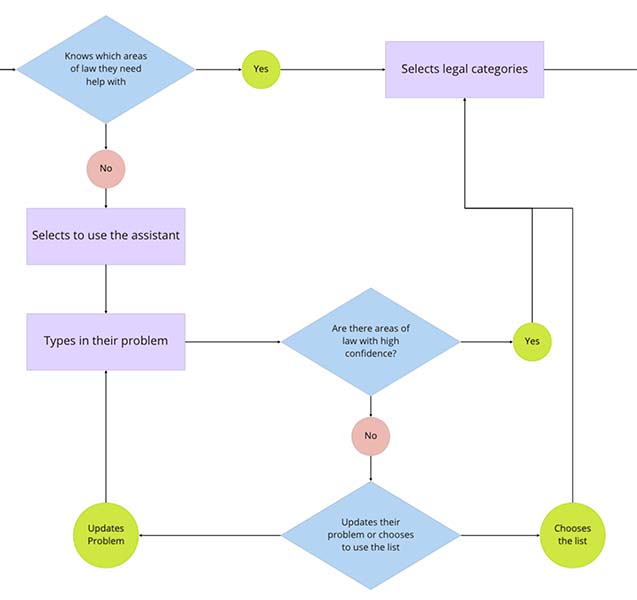

This is the user flow we settled on for the integration. Both pathways lead to the user selecting categories, but that page differs between the two pathways.

Designing and testing the new screens

After some rough sketches and mockups, we quickly iterated on the designs until we had a testable prototype.

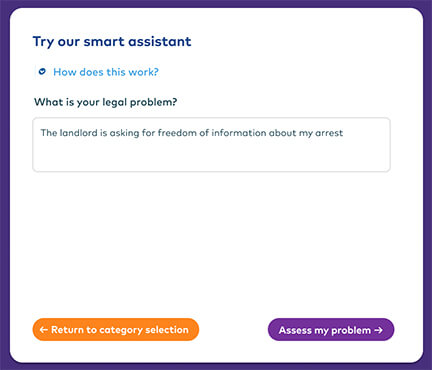

The page where users type in their problem. The AI had no official name and was nicknamed the “smart assistant”.

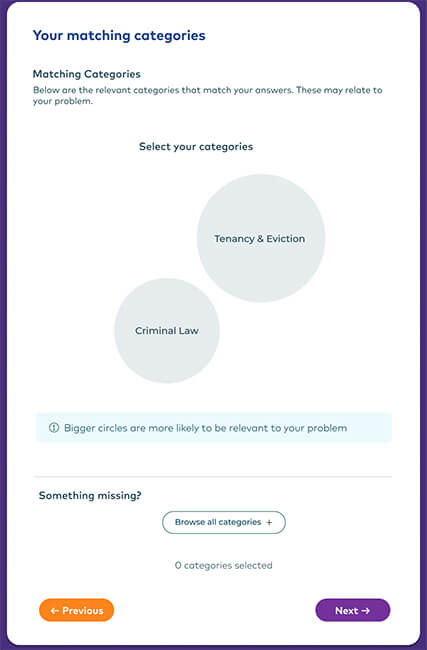

The matched categories page shows the categories matched to the AI’s results. In this version, the page also showed the areas of law the AI identified and the problem the user submitted.
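
The write-up doesn’t describe how the AI’s results are turned into Intake Tool categories. As a minimal sketch, assume the AI returns a list of area-of-law labels and the tool keeps a lookup from each label to one or more categories. All labels and category names below are hypothetical. Under those assumptions, the matching step might look like this:

```python
# Hypothetical lookup from AI "area of law" labels to Intake Tool
# categories. The labels and categories are illustrative only.
AREA_TO_CATEGORIES: dict[str, list[str]] = {
    "family_violence": ["Family violence", "Family law"],
    "separation": ["Family law", "Children"],
    "debt": ["Debts and fines", "Credit and loans"],
    "tenancy": ["Renting", "Housing"],
}


def match_categories(ai_areas: list[str]) -> list[str]:
    """Return the categories matched to the AI's areas of law.

    Keeps the order in which the AI returned the areas and drops duplicate
    categories. An area with no lookup entry contributes nothing.
    """
    matched: list[str] = []
    for area in ai_areas:
        for category in AREA_TO_CATEGORIES.get(area, []):
            if category not in matched:
                matched.append(category)
    return matched


if __name__ == "__main__":
    print(match_categories(["family_violence", "separation"]))
    # ['Family violence', 'Family law', 'Children']
    print(match_categories(["employment"]))  # label with no lookup entry
    # []
```

In a lookup like this, overlapping areas can add up to a long list of categories. An unmapped label can also produce an empty list even though the AI returned a result. These are two plausible ways the problems seen later in the pilot data could arise, not a description of the Intake Tool’s actual logic.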

We cut out what wasn’t working

After usability testing with other staff, we realised that some features of the page that we thought would support users were actually confusing. So we took them to the cutting-room floor, tested the updated prototype with some students and validated the design, which was then ready for development.

The updated matched categories page with much less information.

But users weren’t using the AI

Initially, the AI was opt-in, but after a month only 6% of users had used it. We needed more data, so we changed the setup: 25% of users got the AI pathway (now called “AI-First”), and all users had the option to switch pathways. After another week, we saw that 63% of users given the AI-First pathway were switching to the old list option. So we made another update: users who got the AI-First pathway could no longer switch, which let us collect enough data.
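
The write-up doesn’t say how users were split between the pathways. One common approach hashes a stable identifier into a bucket, so that about 25% of sessions land on AI-First and a returning visitor keeps the same pathway. The sketch below assumes each visitor has such an ID; `session_id` and `assign_pathway` are hypothetical names.

```python
import hashlib

# Share of sessions routed to the AI-First pathway, as a whole percentage.
AI_FIRST_PERCENT = 25


def assign_pathway(session_id: str, ai_first_percent: int = AI_FIRST_PERCENT) -> str:
    """Deterministically assign a session to "ai_first" or "standard".

    `session_id` is a hypothetical stable identifier for one visitor. The
    same ID always hashes to the same bucket, so a returning visitor keeps
    the same pathway without any stored state.
    """
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a bucket from 0 to 99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "ai_first" if bucket < ai_first_percent else "standard"
```

Hashing, rather than drawing a fresh random number on each page load, keeps the assignment stable. Under this sketch, the later “no switching” rule would simply mean not offering a switch option to `ai_first` sessions.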

Results so far and next steps

After three months of the pilot, we reviewed the data and saw results indicating an improved user experience for those who used the AI:

- 8% less likely to abandon their application

- 12% less likely to select “something else”, which we consider a rough measure of confidence

- No change in the chance of being eligible; the numbers were the same whether or not the user used the AI. Many other variables affect a person’s eligibility, so although this number hasn’t improved, we expect further AI-supported work across the whole intake process to improve it.

We did notice a few potential problems with the user experience in the data:

- Some users were getting 10 or more suggested categories

- Some users were getting no categories, even when the AI returned a result

- Some users were completing an income check (an eligibility criterion for many of our services), even when they were already ineligible

Since then, we’ve dug deeper into the data, identified the core problems, and ideated, devised and implemented solutions. It is still too early for the data to show the net effect of these updates.

What I learnt

For various reasons, this project was messy. Learning to wade through the mess, still come out with a validated result, and then iterate quickly around issues was a big success for me.